A couple of weeks ago a new version of Splunk was released: 9.2.2. It included various CVE resolutions (URL), and many of our clients have already responded rapidly and upgraded. Upgrading is a quick, fairly low-effort, low-risk exercise so long as you haven't allowed tech-debt to creep into your infra. However, once your architecture scales to a clustered environment such as a C1, C13 or even a multi-site instance, rather more effort is needed to upgrade.

Working with a client recently, we found they had over 40 Splunk Enterprise servers that required upgrading, including multiple Search Head Clusters (SHCs). On the clusters were a variety of large Key Value Stores (KV stores), which serve as small database-type constructs using MongoDB. These are important to back up just prior to the upgrade works taking place: clients don't want stale data here, so a just-in-time backup is really valuable to have.

KV store backups at scale

The official Splunk upgrade docs (URL) tell you to back up the KV store, and there is a detailed page with commands to verify the status and the restore process (URL). However, no means is offered to do this at scale, which in the case of this particular client meant multiple clusters, multiple apps and multiple KV stores. All of this builds up to an 'N*' scaling challenge.

Step 1: CLI SPL search to obtain a list by app

From the Linux command line (wait, you are running Linux, right?) of your Splunk Enterprise server you can run a Splunk SPL search to gather all the apps that have a KV store. In some cases you may want to specifically exclude generic or heavy-use apps such as Security Essentials or Python Upgrade Readiness, but it all depends on the client need. Trivia: it wasn't until Splunk version 4.x that there was a GUI; prior to that all search was done via the CLI, and it has largely been forgotten that you can even do it this way. It helps us in this instance by removing manual steps, which are more likely to create errors.

sudo -H -u splunk /opt/splunk/bin/splunk search '

| rest /servicesNS/-/-/data/transforms/lookups splunk_server=local

| search type=kvstore | fields eai:appName, title, collection, id

| rename eai:appName as app

| search NOT app IN (Splunk_Security_Essentials, python_upgrade_readiness_app)

| eval list =(app+","+collection)

| fields list' > ~/kvlist-raw.txt

# Note that the end of the command writes the output to 'kvlist-raw.txt'

Step 2: Create a NetRC file

We're going to need to issue a curl command for each KV store that we need to back up. This was over 30 individual items for my client in this instance and the intention is speed; if we were to manually enter a password for each connection it would be sub-optimal. So we can use a netrc file during the works, which should be deleted immediately thereafter. See the curl docs (URL).

In this instance the machine is set to 'localhost', so the file must be placed on one of the Search Heads to function; otherwise curl will fail to connect to the endpoint.

#!/bin/bash

vi ~/.netrc

machine localhost

login

password

# Save and exit

chmod 400 ~/.netrc
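
If you would rather not open an editor, the same three-line file can be written non-interactively. This is only a sketch: the `write_netrc` helper and the placeholder credentials are hypothetical, and the demo writes to a throwaway file rather than `~/.netrc`.

```shell
#!/bin/bash
# Hypothetical helper: write a single-machine netrc entry to the given path.
write_netrc() {
  local path="$1" user="$2" pass="$3"
  (
    umask 077   # anything created here is accessible by the owner only
    printf 'machine localhost\nlogin %s\npassword %s\n' "$user" "$pass" > "$path"
  )
  chmod 400 "$path"   # same read-only mode as the manual step
}

# Demo against a temporary file with placeholder credentials.
demo_netrc=$(mktemp)
write_netrc "$demo_netrc" 'example_user' 'example_password'
cat "$demo_netrc"
rm -f "$demo_netrc"
```

Whichever way the file is created, remove it as soon as the backups have finished.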

Step 3: Clean-up the KV store list

We now need to use a regex to cleanly extract the app and KV store pairs that we wish to feed into our automation script; without this phase we would generate errors.

grep -E '\w+,\w+' kvlist-raw.txt | sort -u > kvlist-clean.txt

rm kvlist-raw.txt
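
To see what the filter keeps, here is a minimal sketch run against made-up input. The header and separator lines, and the app and collection names, are assumptions for illustration and were not captured from a real search.

```shell
#!/bin/bash
# Keep only lines that look like "app,collection" and drop duplicates.
clean_kvlist() {
  grep -E '\w+,\w+' | sort -u
}

# Hypothetical raw output: a header, a separator, then one pair per line.
sample_raw() {
  printf '%s\n' 'list' '----' 'my_app,assets' 'my_app,assets' 'other_app,users'
}

sample_raw | clean_kvlist
```

Only the two distinct `app,collection` pairs survive; the header, the separator and the duplicate are dropped.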

Step 4: Create the automation script

This is the key part of our process and incorporates standard curl retries. There are lots of options which can be edited, and you can reference the docs to adjust to your target environment (URL).

Obviously set the filename variable and 'splunkhome' path per the deployment.

touch kvstorebackup && chmod 0700 kvstorebackup

vi kvstorebackup

#!/bin/bash

filename='Splunk-x.x.x-upgrade-'$(date +%F)

splunkhome='/opt/splunk/bin'

total=$(wc -l < kvlist-clean.txt)

echo "# Splunk KV store backup script, there are $total KV stores to back up:" > backup

while IFS="," read -r value1 value2 remainder

do

echo "curl --retry 20 --retry-delay 30 --retry-max-time 600 -n -k -sS -X POST https://localhost:8089/services/kvstore/backup/create -d 'archiveName=$filename-$value1-$value2&appName=$value1&collectionName=$value2'" >> backup

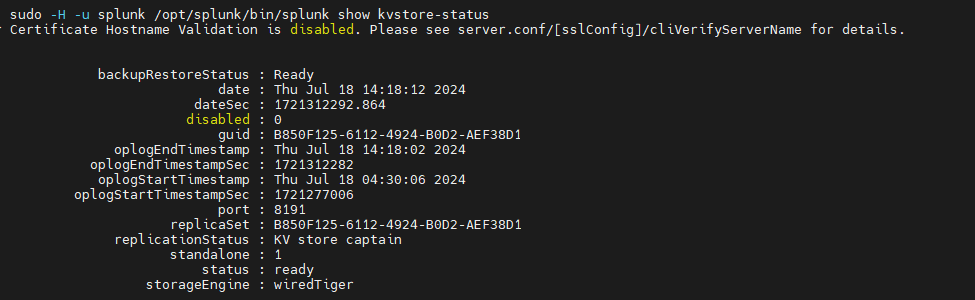

echo 'sudo -H -u splunk '$splunkhome'/splunk show kvstore-status | grep backupRestoreStatus | cut -d ":" -f 2 | cut -d " " -f 2' >> backup

echo 'sleep 5s' >> backup

done
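
The `-d` payload contains `&` characters, so each generated line has to keep it inside quotes or the shell will treat `&` as a control operator. A minimal sketch of the per-pair generation as a function, which makes it easy to dry-run against a sample list; `build_backup_cmd`, the archive prefix and the sample names are hypothetical, and the retry options are left out for brevity.

```shell
#!/bin/bash
# Hypothetical helper: print the curl command for one app/collection pair.
# The payload is wrapped in single quotes so '&' stays data, not shell syntax.
build_backup_cmd() {
  local prefix="$1" app="$2" collection="$3"
  printf "curl -n -k -sS -X POST https://localhost:8089/services/kvstore/backup/create -d 'archiveName=%s-%s-%s&appName=%s&collectionName=%s'\n" \
    "$prefix" "$app" "$collection" "$app" "$collection"
}

# Dry run over a made-up list; nothing is sent to Splunk here.
while IFS=',' read -r app collection _; do
  build_backup_cmd 'Splunk-x.x.x-upgrade-2024-01-01' "$app" "$collection"
done <<'EOF'
my_app,assets
other_app,users
EOF
```

Printing the commands first, rather than executing them directly, keeps the review step described below intact.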

Step 5: Generate the backup file and run it

Great, so running the script creates the file 'backup' with a command for each of the target environment's apps and KV stores. Check the generated file, then run it to initiate the backup process with Splunk. You won't need a password at this point because you stored it in the netrc file.

Go make a brew; this bit can take at least 20-30 minutes in larger environments. Unfortunately it is also carried out in series, not in parallel.

# Generate the backup file:

./kvstorebackup < kvlist-clean.txt

# Check the backup file:

cat backup

# Check the KVstore status:

sudo -H -u splunk /opt/splunk/bin/splunk show kvstore-status | grep backupRestoreStatus

# Execute the backup script

chmod 700 backup

./backup
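
Because the backups run in series, it can be handy to wait until the KV store reports that it is idle before moving on. The sketch below polls a status command using the same grep/cut parsing as the generated script. The `wait_for_status` helper is hypothetical, and both the `Ready` value and the sample status line are assumptions about what `show kvstore-status` prints, so check them against your own output.

```shell
#!/bin/bash
# Hypothetical helper: rerun a status command until it reports the wanted value.
# Usage: wait_for_status WANT TRIES DELAY_SECONDS COMMAND [ARGS...]
wait_for_status() {
  local want="$1" tries="$2" delay="$3" i=0 status
  shift 3
  while [ "$i" -lt "$tries" ]; do
    status=$("$@" | grep backupRestoreStatus | cut -d ':' -f 2 | cut -d ' ' -f 2)
    [ "$status" = "$want" ] && return 0
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Stand-in for 'splunk show kvstore-status' so the sketch runs anywhere.
fake_status() {
  printf '%s\n' ' backupRestoreStatus : Ready' ' status : ready'
}

wait_for_status Ready 3 0 fake_status && echo 'KV store is ready'
```

On a real Search Head the stand-in would be replaced with the actual command, for example `wait_for_status Ready 60 10 sudo -H -u splunk /opt/splunk/bin/splunk show kvstore-status`.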

Step 6: Measure twice, cut once

Great, so your backups should have completed, but you must go and check them: make sure they make sense, that there are the right number of them, and that it looks like you'd have a way out if the upgrade goes wrong.

# Check that the backups are in place:

sudo -iu splunk

ls /opt/splunk/var/lib/splunk/kvstorebackup
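
Counting by eye gets error-prone with dozens of collections, so here is a sketch that lists any pair from the clean list that has no matching archive. It assumes each archive is stored as `<archiveName>.tar.gz` in the backup directory; the `missing_backups` helper and the demo names are hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: print pairs from the list that have no matching archive.
# Assumes each archive is named <prefix>-<app>-<collection>.tar.gz.
missing_backups() {
  local list="$1" dir="$2" prefix="$3" app collection
  while IFS=',' read -r app collection _; do
    [ -e "$dir/$prefix-$app-$collection.tar.gz" ] || echo "$app,$collection"
  done < "$list"
}

# Demo with throwaway files so the sketch runs without Splunk.
demo=$(mktemp -d)
printf 'my_app,assets\nother_app,users\n' > "$demo/kvlist-clean.txt"
touch "$demo/P-my_app-assets.tar.gz"
missing_backups "$demo/kvlist-clean.txt" "$demo" 'P'
rm -rf "$demo"
```

No output means every pair in the list has an archive; any line printed is a backup to rerun before starting the upgrade.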

Git / Feedback

I hope this script helps you and that it simplifies your Splunk upgrade. We'd love to hear any feedback if it was useful to you. At some point in the future we may publish it to Splunkbase and Git.

Any e-mails to:

-